In the real estate business, it’s often said the three most important things are location, location, and location. The same can be said for where security practitioners choose to capture network packets for analysis. Today I’ll examine the differences between two deployments, along with their strengths and weaknesses. I’m using an “import only” installation of Security Onion 2.4.60 to perform the analysis, taking care to flush NSM and Elastic data between the public and private examinations.

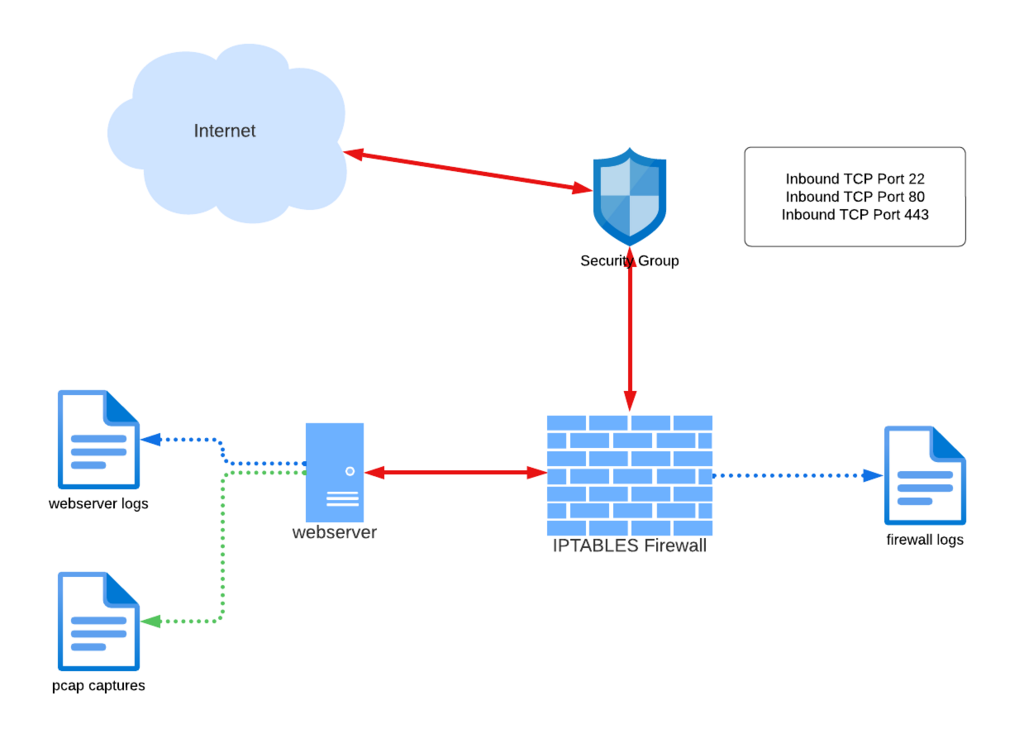

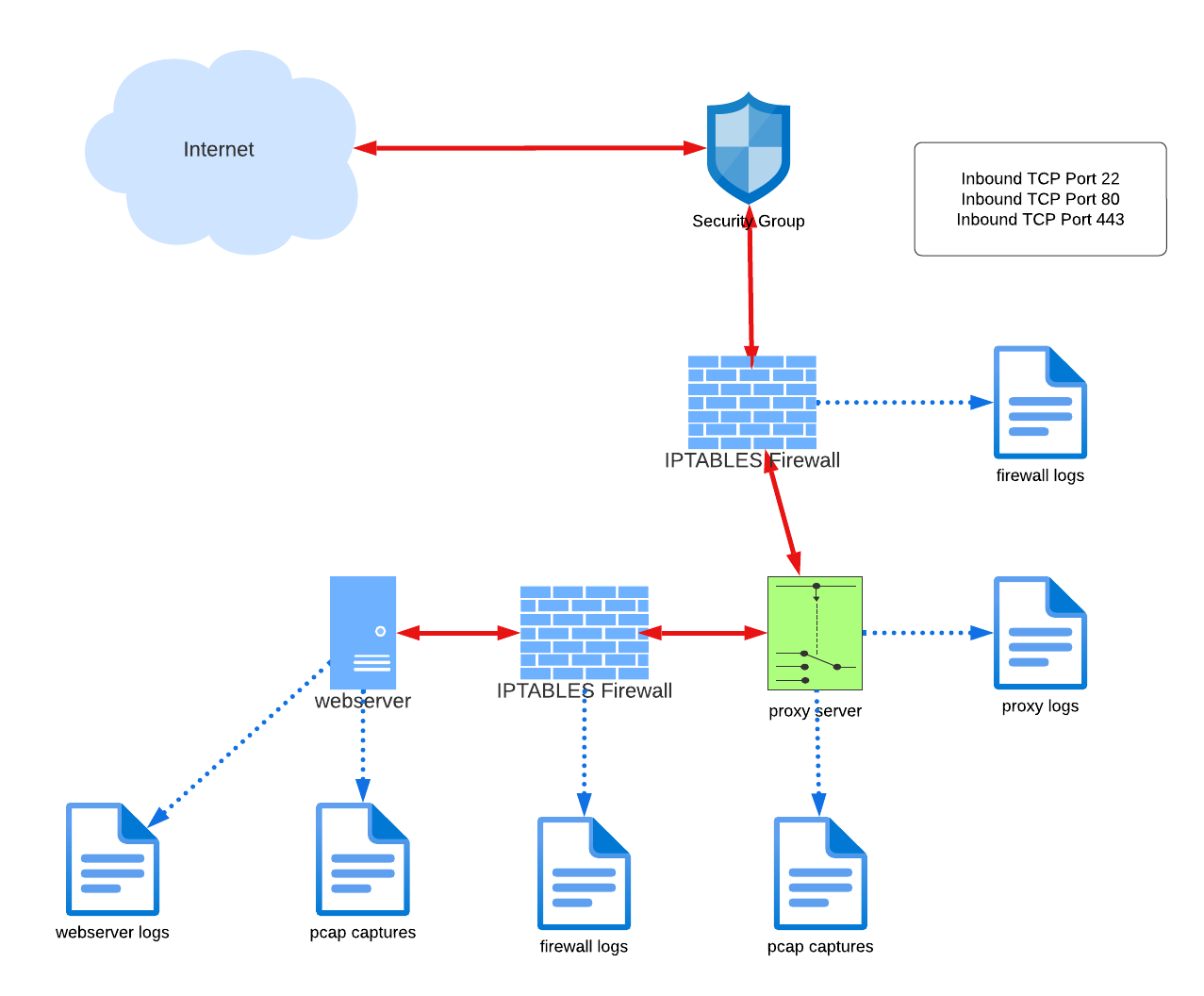

Consider this pre-existing network deployment in AWS: a bastion host protected by a Security Group and a local IPTABLES firewall. While typical of the 1999-2012 era of Internet hosting, it leaves quite a bit to be desired.

While the pre-existing deployment inherits several problems, it is still a fairly well-hardened network. The Security Group allows public access to only three inbound TCP ports and blocks all others. Remote access via SSH on TCP 22 is tightly restricted by source IP address, while HTTP/HTTPS traffic is wide open. The local IPTABLES firewall does a fair amount of heavy lifting, drawing on several public real-time block lists, and provides logging for analysis. The design also provides full packet capture at the server interface, and the web server generates useful logs.
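
The firewall logging is only useful if it gets analyzed. As a hedged illustration (the post doesn't show its logging configuration), the sketch below parses lines written by the netfilter `LOG` target, with its `KEY=VALUE` layout (`SRC=`, `DPT=`, and so on) plus bare flags like `SYN`, and counts the most frequently logged sources. The `IPTABLES-DROP:` prefix and the sample addresses are made up; a real prefix is whatever was set with `--log-prefix`.

```python
import re
from collections import Counter

# KEY=VALUE pairs in a netfilter LOG line; the value may be empty (e.g. "OUT=").
_KV = re.compile(r"\b([A-Z]+)=(\S*)")

def parse_iptables_log(line):
    """Return {"fields": {...}, "flags": {...}} for a LOG line, or None otherwise."""
    start = line.find("IN=")
    if start < 0:
        return None
    body = line[start:]  # drop syslog timestamp, host and --log-prefix text
    fields = dict(_KV.findall(body))
    # Bare upper-case tokens are flags such as DF, SYN or ACK.
    flags = {tok for tok in body.split() if "=" not in tok and tok.isupper()}
    return {"fields": fields, "flags": flags}

def top_blocked_sources(lines, n=5):
    """Count LOG lines per SRC address and return the n most common."""
    counts = Counter()
    for line in lines:
        rec = parse_iptables_log(line)
        if rec and "SRC" in rec["fields"]:
            counts[rec["fields"]["SRC"]] += 1
    return counts.most_common(n)

if __name__ == "__main__":
    # Illustrative lines with documentation addresses and a hypothetical prefix.
    sample = [
        "kernel: IPTABLES-DROP: IN=eth0 OUT= SRC=203.0.113.5 DST=10.0.0.10 PROTO=TCP SPT=51515 DPT=23 SYN URGP=0",
        "kernel: IPTABLES-DROP: IN=eth0 OUT= SRC=203.0.113.5 DST=10.0.0.10 PROTO=TCP SPT=51516 DPT=445 SYN URGP=0",
        "kernel: IPTABLES-DROP: IN=eth0 OUT= SRC=198.51.100.7 DST=10.0.0.10 PROTO=TCP SPT=40000 DPT=3389 SYN URGP=0",
    ]
    print(top_blocked_sources(sample))  # [('203.0.113.5', 2), ('198.51.100.7', 1)]
```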

The primary issues with this deployment included:

- Bastion hosts directly accessible from the Internet come with problems too numerous to list here.

- HTTPS traffic to and from the web server cannot be inspected. The web server logs provide a glimpse into the connections that pass the firewall, but they don’t provide a complete picture for analyzing attacks.

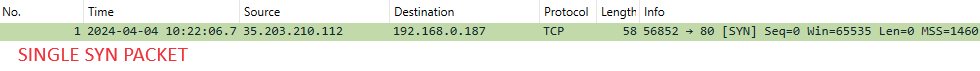

- Packets that are blocked by the local IPTABLES firewall are seen by the packet capture BEFORE the kernel’s firewall rules process them, so they are always recorded. When signature-based intrusion detection analyzes this traffic, it alerts on a single inbound packet with no response: effectively a false positive, since the firewall already dropped it. It’s noisy.
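
To make the noise problem concrete, the sketch below groups captured TCP packets into conversations and flags those made up solely of SYNs from one side with no reply, which is how a firewall-dropped connection attempt looks to a sensor sitting in front of IPTABLES. The `Packet` tuple and its flag letters are a hypothetical stand-in for whatever a real capture parser would produce.

```python
from collections import defaultdict
from typing import NamedTuple

class Packet(NamedTuple):
    src: str
    sport: int
    dst: str
    dport: int
    flags: str  # hypothetical encoding: "S" = SYN, "SA" = SYN+ACK, "A" = ACK

def flow_key(p):
    """Direction-independent key so both halves of a conversation group together."""
    a, b = (p.src, p.sport), (p.dst, p.dport)
    return (a, b) if a <= b else (b, a)

def unanswered_syns(packets):
    """Flows consisting only of SYN-without-ACK packets sent by one side."""
    flows = defaultdict(list)
    for p in packets:
        flows[flow_key(p)].append(p)
    result = []
    for pkts in flows.values():
        first = pkts[0]
        one_sided = all((p.src, p.sport) == (first.src, first.sport) for p in pkts)
        syn_only = all("S" in p.flags and "A" not in p.flags for p in pkts)
        if one_sided and syn_only:
            # (initiator, port, target, port, packet count including retransmits)
            result.append((first.src, first.sport, first.dst, first.dport, len(pkts)))
    return result

if __name__ == "__main__":
    pkts = [
        Packet("198.51.100.7", 40000, "10.0.0.10", 23, "S"),   # dropped probe
        Packet("192.0.2.1", 50000, "10.0.0.10", 443, "S"),     # normal handshake
        Packet("10.0.0.10", 443, "192.0.2.1", 50000, "SA"),
        Packet("192.0.2.1", 50000, "10.0.0.10", 443, "A"),
    ]
    print(unanswered_syns(pkts))  # [('198.51.100.7', 40000, '10.0.0.10', 23, 1)]
```

Alerts whose flow appears in this list are the ones a sensor behind the firewall would never have seen.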

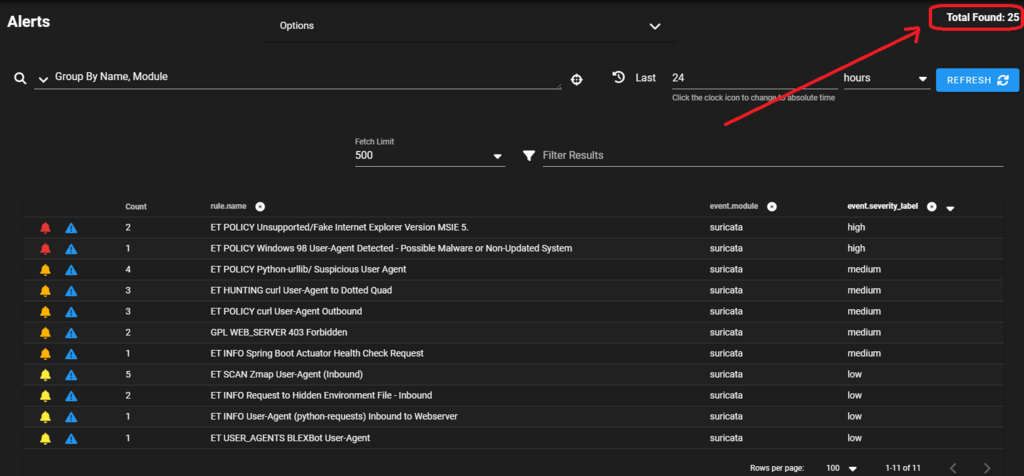

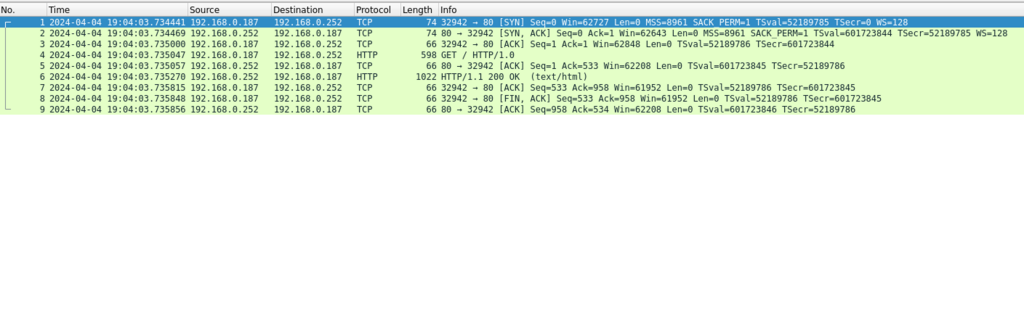

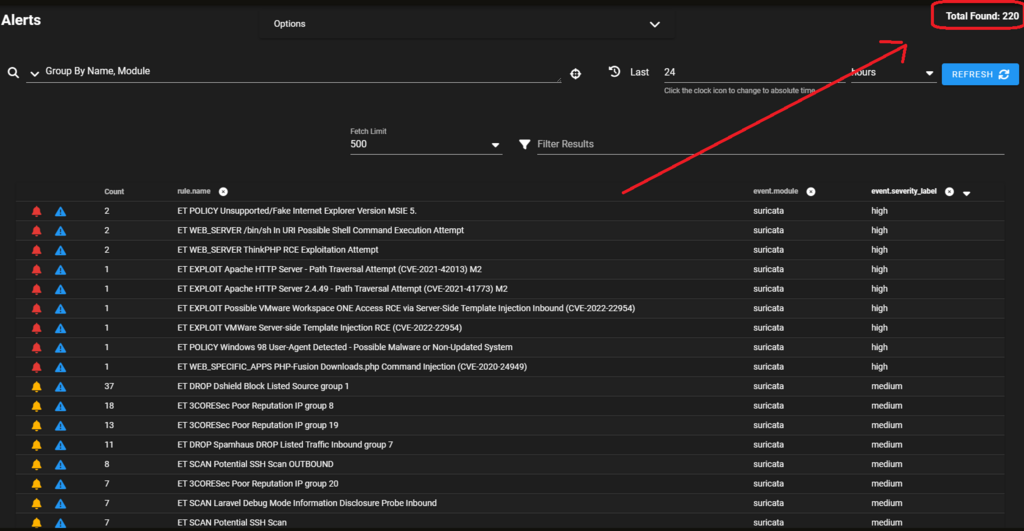

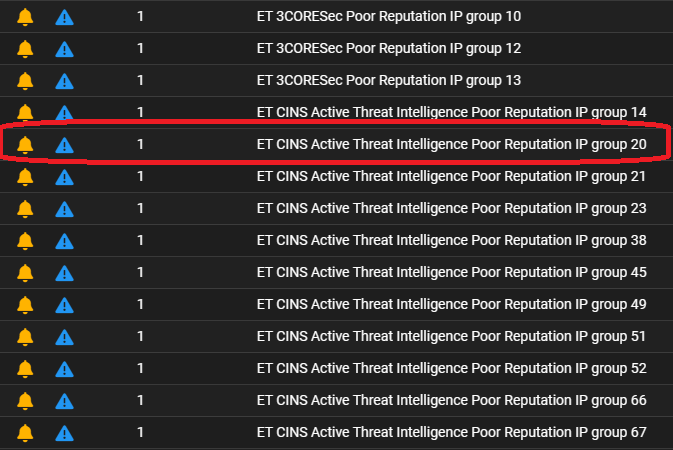

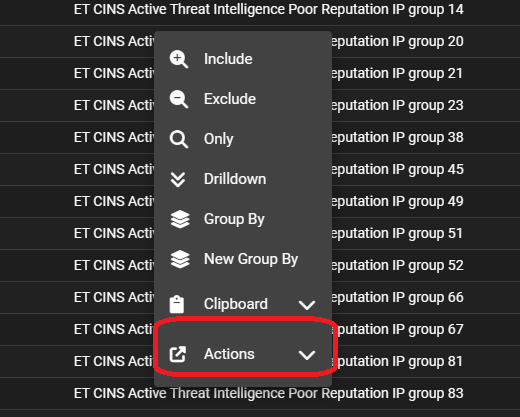

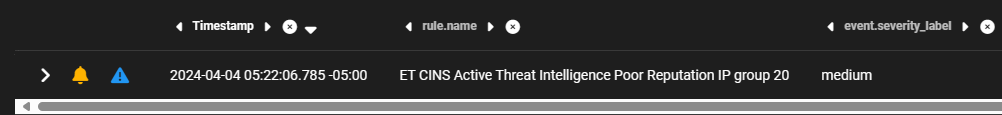

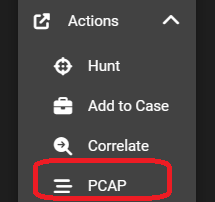

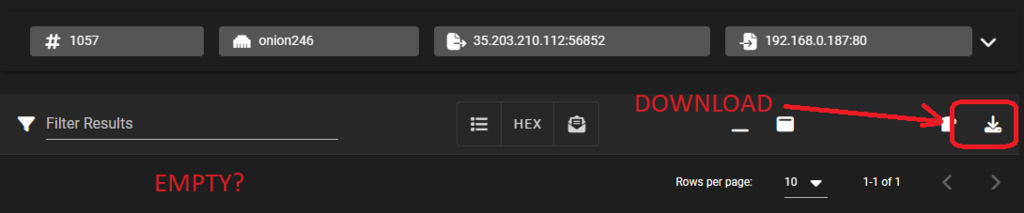

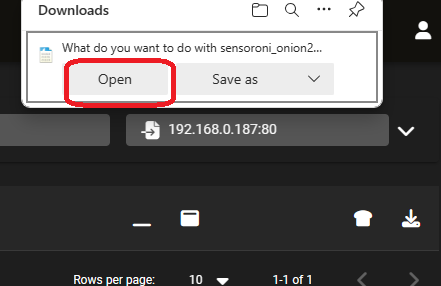

Seeing is believing. The screenshots reveal 220 alerts for a 24-hour period in this posture. A significant number are based on reputation lists, and the traffic behind them was actually blocked by the firewall. We drill down on one at random, which reveals a single record. Using the PCAP action, we can view the traffic. But wait, why is the conversation empty? We download the PCAP, open it in Wireshark, and verify that there is no conversation at all: only a single inbound SYN packet that was dropped.
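
The Wireshark check can also be scripted. The sketch below walks a classic libpcap file (not pcapng), assumes Ethernet framing with untagged IPv4, and reports whether the capture holds exactly one TCP segment with SYN set and ACK clear, which is the lone dropped SYN seen here. The function names are hypothetical; for anything beyond a quick check, a full dissector such as `tshark` is the more robust choice.

```python
import socket
import struct

SYN, ACK = 0x02, 0x10

def iter_pcap_tcp(data: bytes):
    """Yield (src, sport, dst, dport, tcp_flags) for each IPv4/TCP packet in a
    classic pcap byte string. Assumes Ethernet link type (1) and no VLAN tags."""
    if len(data) < 24:
        raise ValueError("truncated pcap global header")
    magic = struct.unpack_from("<I", data, 0)[0]
    if magic in (0xA1B2C3D4, 0xA1B23C4D):      # micro/nanosecond, little-endian file
        endian = "<"
    elif magic in (0xD4C3B2A1, 0x4D3CB2A1):    # same magics, big-endian file
        endian = ">"
    else:
        raise ValueError("not a classic pcap file (pcapng is not handled)")
    linktype = struct.unpack_from(endian + "I", data, 20)[0]
    if linktype != 1:
        raise ValueError(f"unsupported link type {linktype}")
    offset = 24
    while offset + 16 <= len(data):
        incl_len = struct.unpack_from(endian + "IIII", data, offset)[2]
        frame = data[offset + 16 : offset + 16 + incl_len]
        offset += 16 + incl_len
        if len(frame) < 34 or frame[12:14] != b"\x08\x00":
            continue  # not IPv4 over Ethernet
        ip = frame[14:]
        ihl = (ip[0] & 0x0F) * 4
        if ip[0] >> 4 != 4 or ip[9] != 6 or len(ip) < ihl + 14:
            continue  # not IPv4/TCP, or truncated
        tcp = ip[ihl:]
        sport, dport = struct.unpack_from("!HH", tcp, 0)
        yield (socket.inet_ntoa(ip[12:16]), sport,
               socket.inet_ntoa(ip[16:20]), dport, tcp[13])

def is_lone_syn(data: bytes) -> bool:
    """True if the capture holds exactly one TCP segment with SYN set and ACK clear."""
    pkts = list(iter_pcap_tcp(data))
    return len(pkts) == 1 and (pkts[0][4] & (SYN | ACK)) == SYN

# Usage (hypothetical file name):
#   with open("alert.pcap", "rb") as f:
#       print(is_lone_syn(f.read()))
```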

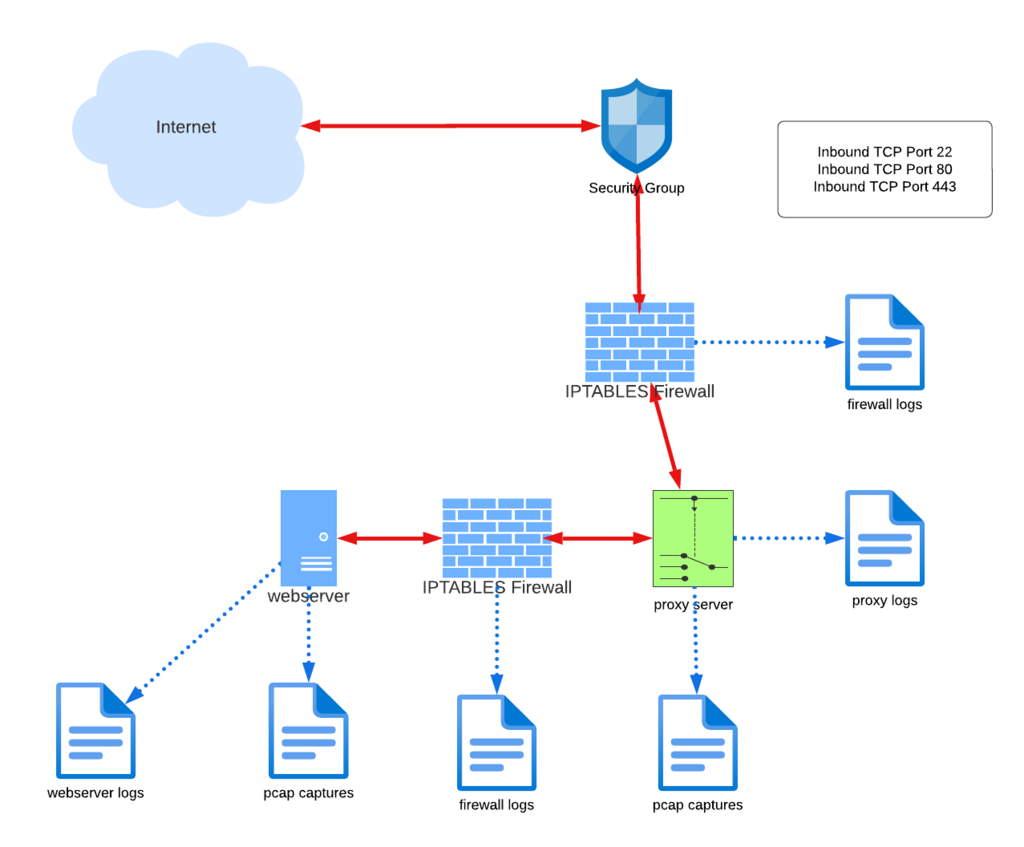

Examining all 220 alerts for the preceding 24 hours is time-consuming, and we shouldn’t be chasing our tails over blocked inbound SYN packets. Redeploying with the web server behind a proxy lets us split the horizon and capture packets both in front of and behind the firewall.

Segregating “public” and “private” packet capture lets us analyze each side separately. As you can see here, this reduces the number of active alerts for the same 24-hour period to 25, a far more manageable volume.
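
For a sense of scale, going from 220 to 25 active alerts over the same 24-hour window is roughly an 89% reduction. The trivial helper below just makes that arithmetic explicit; it assumes both counts cover identical time windows, as they do here.

```python
def percent_reduction(before: int, after: int) -> float:
    """Percentage drop from `before` to `after`; both counts must cover the same window."""
    if before <= 0:
        raise ValueError("baseline count must be positive")
    return (before - after) / before * 100

print(f"{percent_reduction(220, 25):.1f}% fewer alerts")  # 88.6% fewer alerts
```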

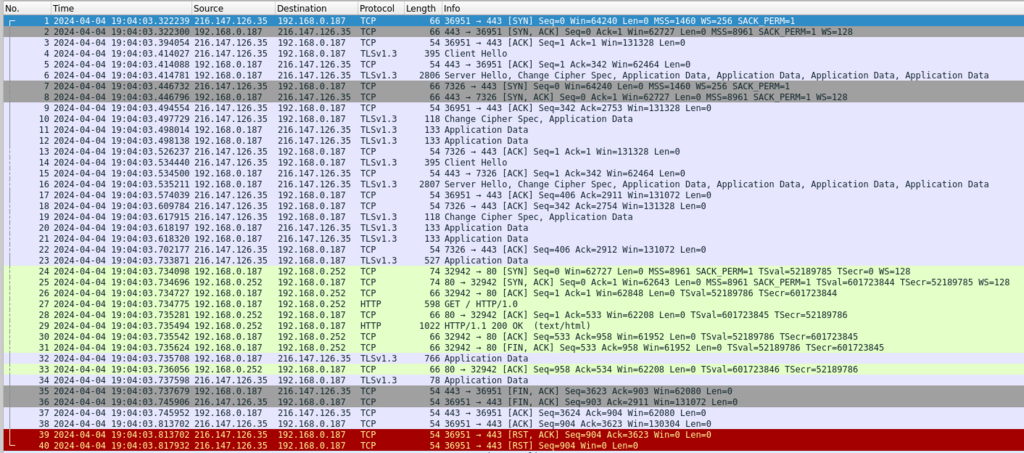

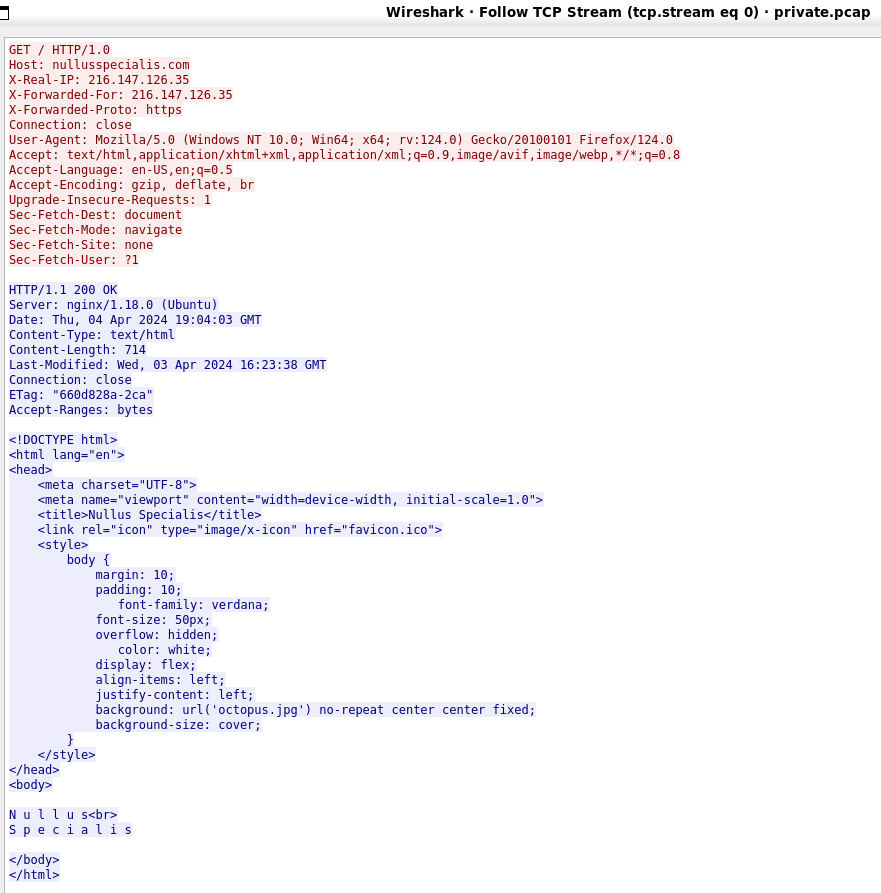

And what of our desire to examine HTTP traffic? Previously, our TLS certificate prevented this without jumping through some pretty crazy hoops. This deployment keeps it simple: the TLS certificate lives on the public side of the proxy server, and the proxy communicates with the actual web server unencrypted on TCP port 80. Take a look at the next pair of screen captures.
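
The post doesn’t name the proxy software, and production deployments would normally use a dedicated reverse proxy. Purely to illustrate where TLS ends and plaintext begins, here is a hypothetical byte-level forwarder built on Python’s `asyncio` and `ssl`: it accepts TLS on the public side and relays the decrypted stream to the web server over plain TCP. It is not HTTP-aware, so it adds no `X-Forwarded-For` header, and the web server will log the proxy’s address as the client.

```python
import asyncio
import ssl

async def _pipe(reader, writer):
    """Copy bytes in one direction until EOF, then signal EOF to the other side."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
        if writer.can_write_eof():
            writer.write_eof()   # plain TCP: half-close so the reply can still flow
        else:
            writer.close()       # TLS transports can't half-close, so close fully
    except OSError:
        pass                     # peer went away; the handler closes both sides

def make_handler(backend_host, backend_port):
    async def handle(client_reader, client_writer):
        try:
            # Private leg: plaintext to the web server (TCP 80 in the post).
            backend_reader, backend_writer = await asyncio.open_connection(
                backend_host, backend_port)
        except OSError:
            client_writer.close()
            return
        try:
            await asyncio.gather(_pipe(client_reader, backend_writer),
                                 _pipe(backend_reader, client_writer))
        finally:
            backend_writer.close()
            client_writer.close()
    return handle

async def start_proxy(listen_host, listen_port, backend_host, backend_port,
                      certfile=None, keyfile=None):
    """Start the forwarder; with certfile set, TLS terminates on the listening side."""
    ctx = None
    if certfile:
        # Public leg: clients negotiate TLS with the proxy, not the web server.
        ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
        ctx.load_cert_chain(certfile, keyfile)
    return await asyncio.start_server(make_handler(backend_host, backend_port),
                                      listen_host, listen_port, ssl=ctx)

# Usage (hypothetical addresses and certificate paths):
#   async def main():
#       server = await start_proxy("0.0.0.0", 443, "10.0.1.20", 80,
#                                  "fullchain.pem", "privkey.pem")
#       async with server:
#           await server.serve_forever()
#   asyncio.run(main())
```

A sensor on the private segment between this forwarder and the web server sees the HTTP request in cleartext, which is exactly the visibility the redesign is after.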

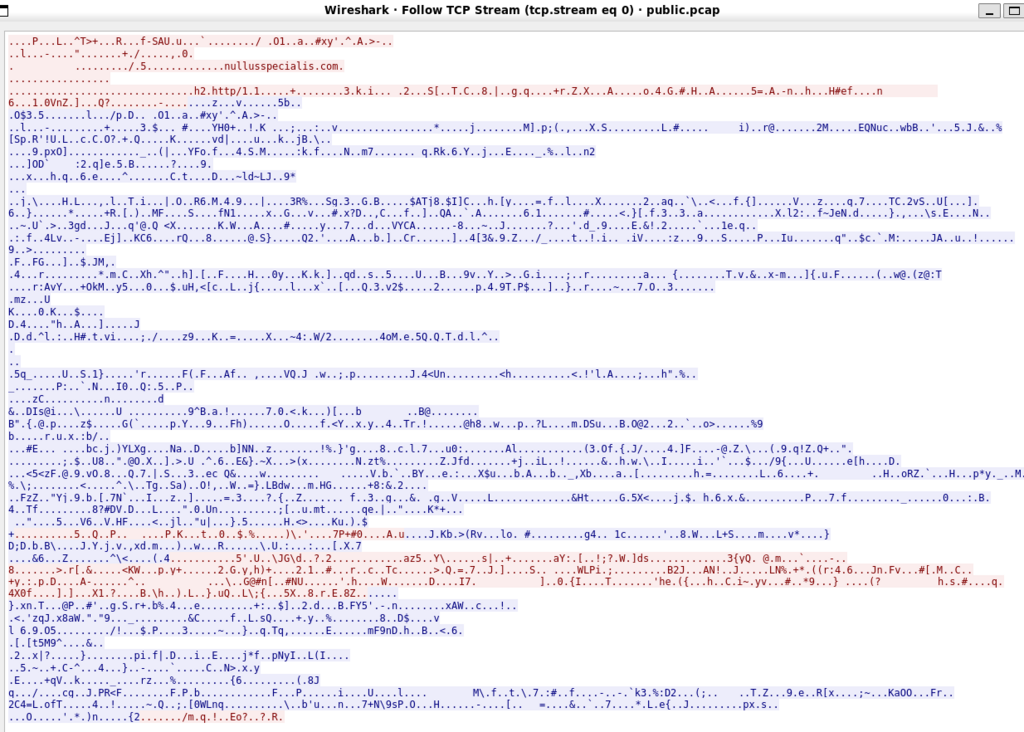

In the first screen capture, I ran tcpdump with the “not tcp port 22” filter, then engaged the server with a browser over HTTPS and performed a single page load. Using Wireshark’s “Follow TCP Stream” on the first packet, we can see the conversation is encrypted, save only the domain name.
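
The domain name survives because the TLS ClientHello carries it in cleartext in the Server Name Indication (SNI) extension, unless Encrypted Client Hello is in use. As a hedged sketch, the parser below pulls that name out of a single TLS record; it assumes the entire ClientHello fits in one record and one TCP segment, which is common but not guaranteed. The function name is hypothetical.

```python
import struct

def extract_sni(record: bytes):
    """Return the SNI host name from a TLS record holding a ClientHello, else None.
    Assumes the entire ClientHello sits in this one record."""
    try:
        if record[0] != 0x16 or record[5] != 0x01:  # handshake record, ClientHello
            return None
        body = record[9:]          # skip 5-byte record header + 4-byte handshake header
        pos = 2 + 32               # legacy_version + random
        pos += 1 + body[pos]       # session_id
        pos += 2 + struct.unpack_from("!H", body, pos)[0]  # cipher_suites
        pos += 1 + body[pos]       # compression_methods
        if pos + 2 > len(body):
            return None            # no extensions block
        end = pos + 2 + struct.unpack_from("!H", body, pos)[0]
        pos += 2
        while pos + 4 <= end:
            ext_type, ext_len = struct.unpack_from("!HH", body, pos)
            pos += 4
            if ext_type == 0:      # server_name extension (RFC 6066)
                p = pos + 2        # skip server_name_list length
                while p + 3 <= pos + ext_len:
                    name_type = body[p]
                    name_len = struct.unpack_from("!H", body, p + 1)[0]
                    if name_type == 0:  # host_name
                        return body[p + 3 : p + 3 + name_len].decode("ascii")
                    p += 3 + name_len
                return None
            pos += ext_len
        return None
    except (IndexError, struct.error, UnicodeDecodeError):
        return None                # truncated or malformed input
```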

We began with a single firewall log, a single web server log, and a single full packet capture. The new design essentially doubles this: two firewall logs, a proxy log to correlate with the web server log, and two full packet captures.
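
Behind a typical reverse proxy, the web server sees requests arriving from the proxy, so matching the proxy log to the web server log is what restores the real client address for each request. The sketch below is a minimal, hypothetical approach: it assumes both logs have already been parsed into a common `LogEntry` shape with comparable timestamps, and pairs entries by method, path and time proximity. Proxies can also inject a header such as `X-Forwarded-For` or a request ID, which makes correlation exact; the post doesn’t say whether that was configured.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class LogEntry:
    ts: float     # epoch seconds, after normalizing each log's timestamp format
    method: str
    path: str
    status: int
    client: str   # proxy log: real client IP; web log: usually the proxy's IP

def correlate(proxy_log, web_log, window=2.0):
    """Pair each proxy entry with the earliest unused web entry having the same
    method and path within `window` seconds. Returns (pairs, proxy_only)."""
    web = sorted(web_log, key=lambda e: e.ts)
    used = [False] * len(web)
    pairs, proxy_only = [], []
    for p in sorted(proxy_log, key=lambda e: e.ts):
        for i, w in enumerate(web):
            if (not used[i] and (w.method, w.path) == (p.method, p.path)
                    and abs(w.ts - p.ts) <= window):
                used[i] = True
                pairs.append((p, w))
                break
        else:
            # No backend match: answered by the proxy itself or outside the window.
            proxy_only.append(p)
    return pairs, proxy_only
```

The nested loop is O(n·m), which is fine for illustration but would want an index keyed on (method, path) for a day’s worth of logs.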
