If you’re an old BASH head like I am, you’ve probably leaned on a FOR loop countless times. It’s become natural enough over the years to just whip one up on the command line, and I use it in scripting every day.

Dear Bash FOR loop,

You have indeed been trusty and true for many years, and I’ll always be appreciative of the support you provided me. Recently my new friend Python introduced me to Thread Pools… I’m sure you can see where this is going.

It’s me, not you. </3

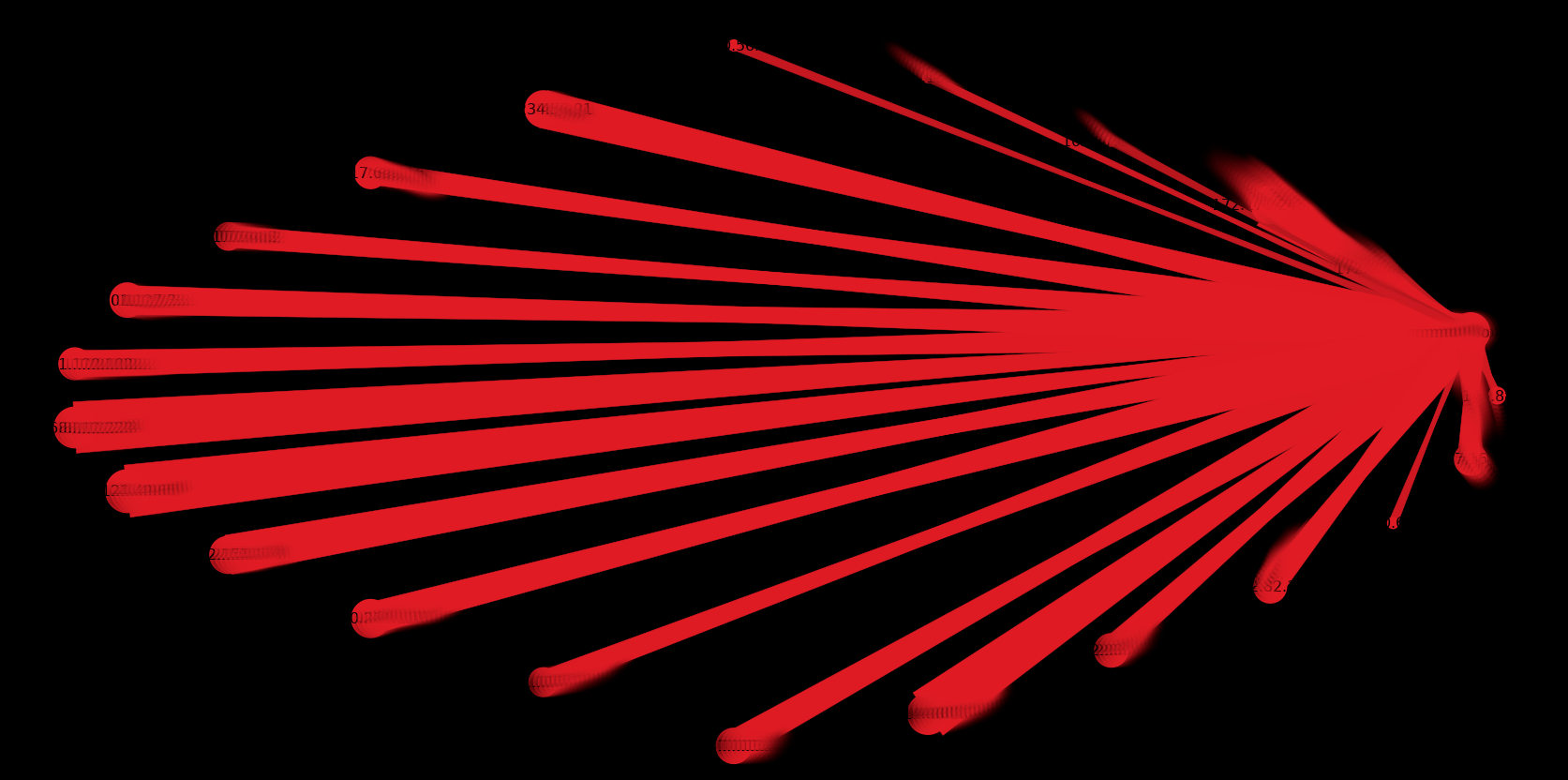

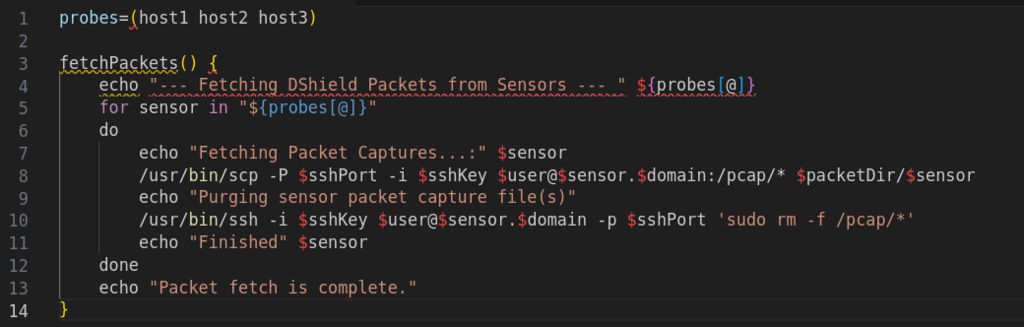

One such usage in my daily activities involves fetching log and/or packet capture files from a number of hosts spread across the globe. The lack of efficiency wasn’t readily apparent when the list of hosts was short. Take for example the code snippet below. For the three hosts in the array (hosts 1-3) the loop is executed, and files are snatched. A pretty way to visualize this is to pipe a packet capture through EtherApe.

The image above shows the serial pattern: connect to one host, fetch its files, and only then move on to the next host. How can I improve this and get some of my day back? Enter your new friend Python.

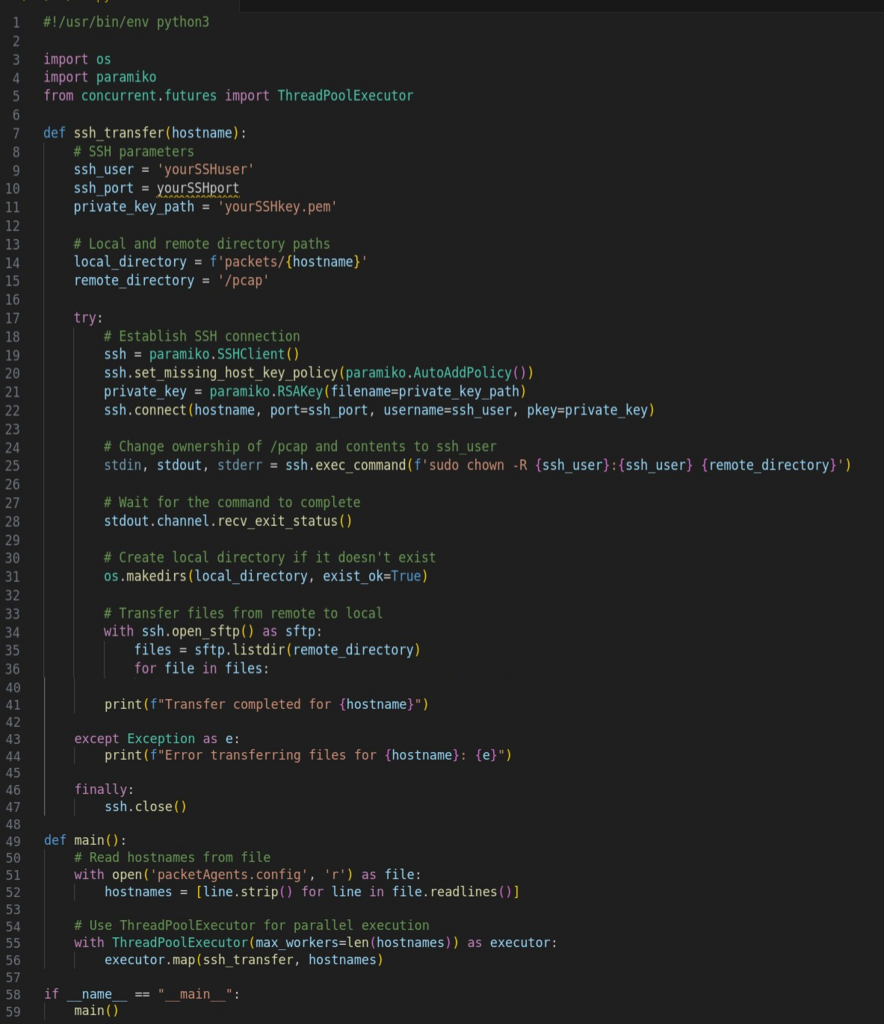

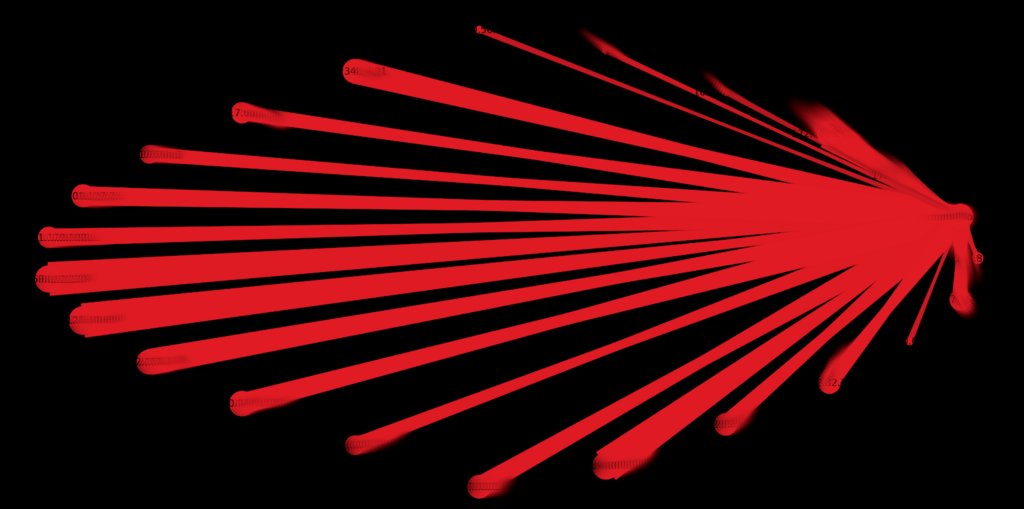

This script establishes an SSH connection to each hostname, uses sudo to change ownership of /pcap and its contents to the defined user, and then transfers the files to a local directory. The connections run in parallel, one worker thread per hostname. To prevent disconnects or broken pipes during large file transfers with the OpenSSH client, you can adjust the ServerAliveInterval and ServerAliveCountMax parameters in the ssh_config file. Paramiko does not read ssh_config on its own, so inside the Python script the comparable setting is set_keepalive on the connection's transport. Additionally, you can use the get_pty parameter in SSHClient.exec_command to allocate a pseudo-terminal. What we end up with in this case is twenty-four distinct parallel executions of the file transfer, one for each host in my list. Time to completion will of course depend on a host of things, such as file size and network path.
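The keepalive and pseudo-terminal options can be set from Paramiko itself. Here is a minimal sketch, assuming `client` is an already-connected `paramiko.SSHClient`. The helper names `enable_keepalive` and `run_with_pty` and the 30-second interval are my own illustrative choices, not part of the script in this post.

```python
def enable_keepalive(client, interval_seconds=30):
    """Ask the SSH transport to send a keepalive packet every interval_seconds.

    `client` is assumed to be a connected paramiko.SSHClient.
    The 30-second default is an arbitrary illustrative value.
    """
    transport = client.get_transport()
    if transport is None:
        raise RuntimeError("SSH client is not connected")
    transport.set_keepalive(interval_seconds)
    return transport


def run_with_pty(client, command):
    """Run a command with a pseudo-terminal and return (exit_status, output)."""
    stdin, stdout, stderr = client.exec_command(command, get_pty=True)
    # Drain the output first so a chatty command cannot stall on a full buffer
    output = stdout.read()
    # Then block until the remote command reports its exit status
    status = stdout.channel.recv_exit_status()
    return status, output
```

In the transfer function, these would be called right after `ssh.connect(...)`, for example `enable_keepalive(ssh)` followed by `run_with_pty(ssh, 'sudo chown ...')`.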

The time difference in execution is like comparing apples to freight trains. I’m out of my depth for this kind of math but believe it would be expressed as a ratio. The longer the list of hosts becomes the less efficient the serial copy method becomes – the candle burns at both ends.
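That ratio can be made concrete. If host i takes t_i seconds, the serial loop takes roughly the sum of every t_i, while a fully parallel run takes roughly the single largest t_i, so the speedup is about sum divided by max. This assumes the network link and local disk are not the bottleneck. The sketch below simulates it with `time.sleep` standing in for a transfer. The host names and durations are made up for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical per-host transfer times in seconds (illustrative only)
SIMULATED_TRANSFERS = {"host1": 0.2, "host2": 0.3, "host3": 0.1}


def fake_transfer(hostname):
    """Stand-in for an SSH/SFTP transfer: sleeps for the host's duration."""
    time.sleep(SIMULATED_TRANSFERS[hostname])
    return hostname


def time_serial(hostnames):
    """Time one-at-a-time execution, like the Bash for loop."""
    start = time.perf_counter()
    for hostname in hostnames:
        fake_transfer(hostname)
    return time.perf_counter() - start


def time_parallel(hostnames):
    """Time execution with one worker thread per host."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=len(hostnames)) as executor:
        # list() forces every result to be collected before the timer stops
        list(executor.map(fake_transfer, hostnames))
    return time.perf_counter() - start


if __name__ == "__main__":
    hosts = list(SIMULATED_TRANSFERS)
    serial = time_serial(hosts)
    parallel = time_parallel(hosts)
    print(f"serial:   {serial:.2f}s (about the sum of all transfers)")
    print(f"parallel: {parallel:.2f}s (about the slowest transfer)")
    print(f"speedup:  {serial / parallel:.1f}x")
```

Adding hosts to the dictionary grows the serial time with every entry, while the parallel time only moves when a new host is slower than the current slowest one.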

I’m fairly new to Python. I HIGHLY recommend Mark Baggett’s SEC573: Automating Information Security with Python for jump-starting your Python coding career.

BASH Example:

    # Assumes sshPort, sshKey, user, domain, and packetDir are set earlier in the script
    probes=(host1 host2 host3)

    fetchPackets() {
        echo "--- Fetching Packets --- ${probes[*]}"
        for sensor in "${probes[@]}"
        do
            echo "Fetching Packet Captures...: $sensor"
            # Make sure the per-sensor destination directory exists
            mkdir -p "$packetDir/$sensor"
            /usr/bin/scp -P "$sshPort" -i "$sshKey" "$user@$sensor.$domain:/pcap/*" "$packetDir/$sensor"
            echo "Purging sensor packet capture file(s)"
            /usr/bin/ssh -i "$sshKey" "$user@$sensor.$domain" -p "$sshPort" 'sudo rm -f /pcap/*'
            echo "Finished $sensor"
        done
        echo "Packet fetch is complete."
    }

Python Example:

    #!/usr/bin/env python3
    import os
    from concurrent.futures import ThreadPoolExecutor

    import paramiko


    def ssh_transfer(hostname):
        # SSH parameters (placeholders: replace with your own values)
        ssh_user = 'yourSSHuser'
        ssh_port = yourSSHport  # placeholder: replace with an integer port
        private_key_path = 'yourSSHkey.pem'

        # Local and remote directory paths
        local_directory = f'packets/{hostname}'
        remote_directory = '/pcap'

        # Create the client outside the try block so finally can always close it
        ssh = paramiko.SSHClient()
        try:
            # Establish SSH connection
            ssh.set_missing_host_key_policy(paramiko.AutoAddPolicy())
            private_key = paramiko.RSAKey(filename=private_key_path)
            ssh.connect(hostname, port=ssh_port, username=ssh_user, pkey=private_key)

            # Change ownership of /pcap and contents to ssh_user
            stdin, stdout, stderr = ssh.exec_command(f'sudo chown -R {ssh_user}:{ssh_user} {remote_directory}')
            # Wait for the command to complete
            stdout.channel.recv_exit_status()

            # Create local directory if it doesn't exist
            os.makedirs(local_directory, exist_ok=True)

            # Transfer files from remote to local
            with ssh.open_sftp() as sftp:
                files = sftp.listdir(remote_directory)
                for file in files:
                    remote_file_path = f'{remote_directory}/{file}'
                    local_file_path = f'{local_directory}/{file}'
                    sftp.get(remote_file_path, local_file_path)

            print(f"Transfer completed for {hostname}")
        except Exception as e:
            print(f"Error transferring files for {hostname}: {e}")
        finally:
            ssh.close()


    def main():
        # Read hostnames from file, skipping blank lines
        with open('packetAgents.config', 'r') as file:
            hostnames = [line.strip() for line in file if line.strip()]

        # ThreadPoolExecutor rejects max_workers=0, so stop early on an empty list
        if not hostnames:
            print("No hostnames found in packetAgents.config")
            return

        # Use ThreadPoolExecutor for parallel execution
        with ThreadPoolExecutor(max_workers=len(hostnames)) as executor:
            executor.map(ssh_transfer, hostnames)


    if __name__ == "__main__":
        main()
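One worker per host is fine for a couple dozen sensors, but with a longer list it may be worth capping the pool size and reporting which hosts failed. This is a sketch under assumptions: `transfer` is any callable that takes a hostname and raises on failure, and `run_all` and the cap of 8 workers are hypothetical choices of mine. The `ssh_transfer` function above catches its own exceptions, so it would need to re-raise them for failures to show up here.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def run_all(transfer, hostnames, max_workers=8):
    """Run transfer(hostname) for every host and return (succeeded, failed).

    succeeded is a list of hostnames; failed maps hostname -> exception.
    """
    succeeded, failed = [], {}
    if not hostnames:
        return succeeded, failed

    # Never start more threads than there are hosts
    workers = min(max_workers, len(hostnames))
    with ThreadPoolExecutor(max_workers=workers) as executor:
        # Remember which future belongs to which host
        futures = {executor.submit(transfer, h): h for h in hostnames}
        # as_completed yields each future as soon as its transfer finishes
        for future in as_completed(futures):
            hostname = futures[future]
            try:
                future.result()
            except Exception as exc:
                failed[hostname] = exc
            else:
                succeeded.append(hostname)
    return succeeded, failed
```

A caller could then print `failed` at the end of the run and retry only those hosts, instead of scrolling back through interleaved output from every thread.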